Overview

Role

Product Designer

Industry / Product

Enterprise B2B AI Platform (NDA)

I worked on an enterprise B2B AI platform that enables developers to build, test, and deploy agent-based AI systems connected to high-risk domains such as HR and Finance.

When developers started building agent-based AI, they could tell that something had gone wrong, but not why. My work therefore focused on trust, explainability, and compliance.

Due to the NDA, product names and visuals have been abstracted. Contact me to view the presentation deck.

The Problem

Enterprise AI systems don’t fail loudly; they fail silently. As the platform evolved toward agentic AI, ensuring trust, explainability, and compliance became critical.

When we started building agent-based AI connected to HR and Finance systems, developers could tell that something had gone wrong, but not why.

Failures felt like black-box errors, leaving developers asking:

Was the tool even called?

Which agent made the decision?

Where did the logic break?

Debugging meant guessing across chat logs, flow diagrams, and system traces.

My work focused on one critical question:

How do we make autonomous AI systems understandable enough for developers to trust?

The Strategic Decision

The strategic risks:

High Mean-Time-to-Debug

Low confidence in deploying agents to production

Slower platform adoption across enterprise teams

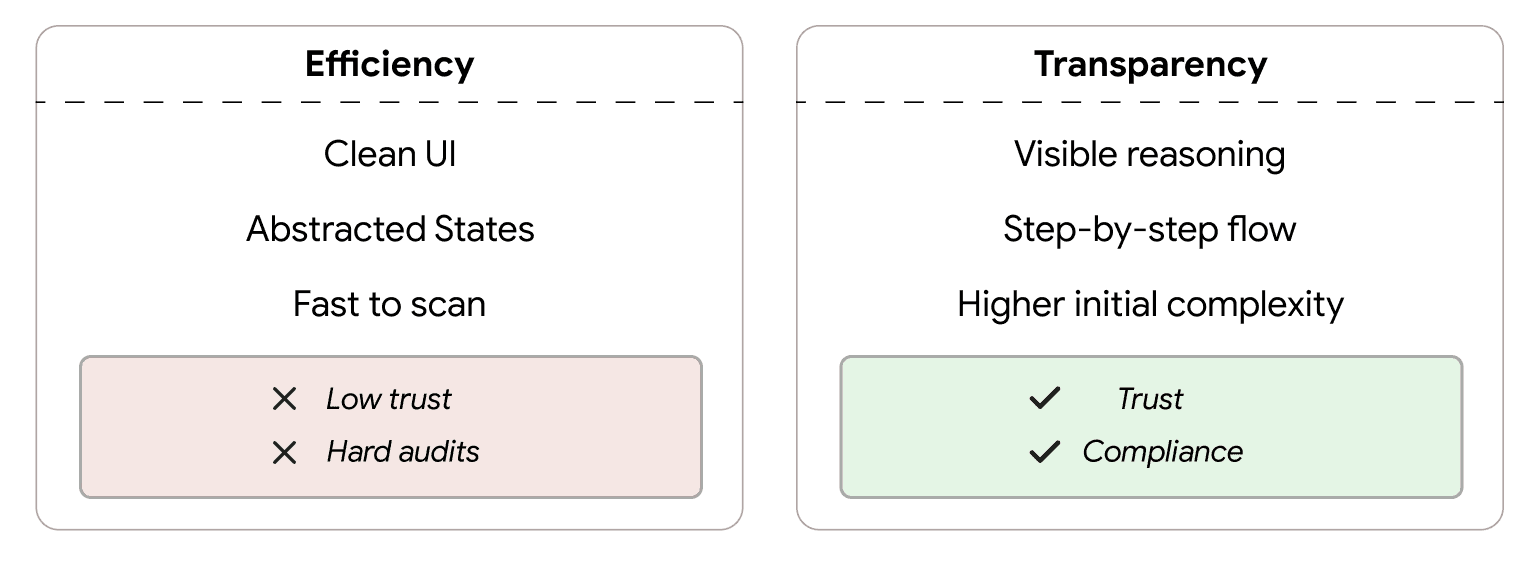

We explored simplifying the interface by hiding internal complexity. However, for enterprise systems connected to HR and Finance, trust and auditability outweighed surface-level simplicity.

We chose to design for transparent reasoning, despite the higher initial complexity.

My Role & Scope

My responsibility was a critical workflow within a large platform: the debugging experience inside the developer playground, where agents are tested and validated.

I partnered closely with the product manager, engineers, and other designers to answer three key questions:

What reasoning should be visible?

How should failures be exposed without overwhelming users?

Where does abstraction help trust, and where does it harm it?

Solution: Design Goal

Help developers understand what the agent did and why, before diving into low-level system details.

Key Design Decisions

Debugging as a trust problem

Treating failures as explainability gaps rather than tooling gaps.

Transparency over efficiency

Optimized for long-term confidence in enterprise environments.

Progressive disclosure

High-level reasoning first, deep logs when needed.

Designed for scale

Faster debugging meant faster time to production.

We designed a timeline-based debugging experience that:

Visualized agent handoffs step-by-step

Showed tool invocation and reasoning inline

Flagged failures at the exact point of breakdown

Allowed developers to retry from a specific step

This eliminated context-switching between chat logs, flow diagrams, and system traces, and made agent behavior explainable.

Impact

This work significantly improved the developer experience:

Mean-Time-to-Debug reduced by over 40%

Developer confidence increased when deploying agents

Platform adoption expanded from early beta users to multiple enterprise teams

The debugging experience became a key enabler for scaling agent-based AI across the platform.

Reflection

This project reinforced a few key lessons:

In enterprise AI, trust and explainability are prerequisites for adoption

Designing for developer experience (DX) directly improves the end-user experience

Clear mental models matter more than exposing every system detail

I’m happy to discuss this project further. Feel free to connect!